Enterprises are increasingly deploying LLMs across multiple cloud providers to balance performance, resilience, and cost.

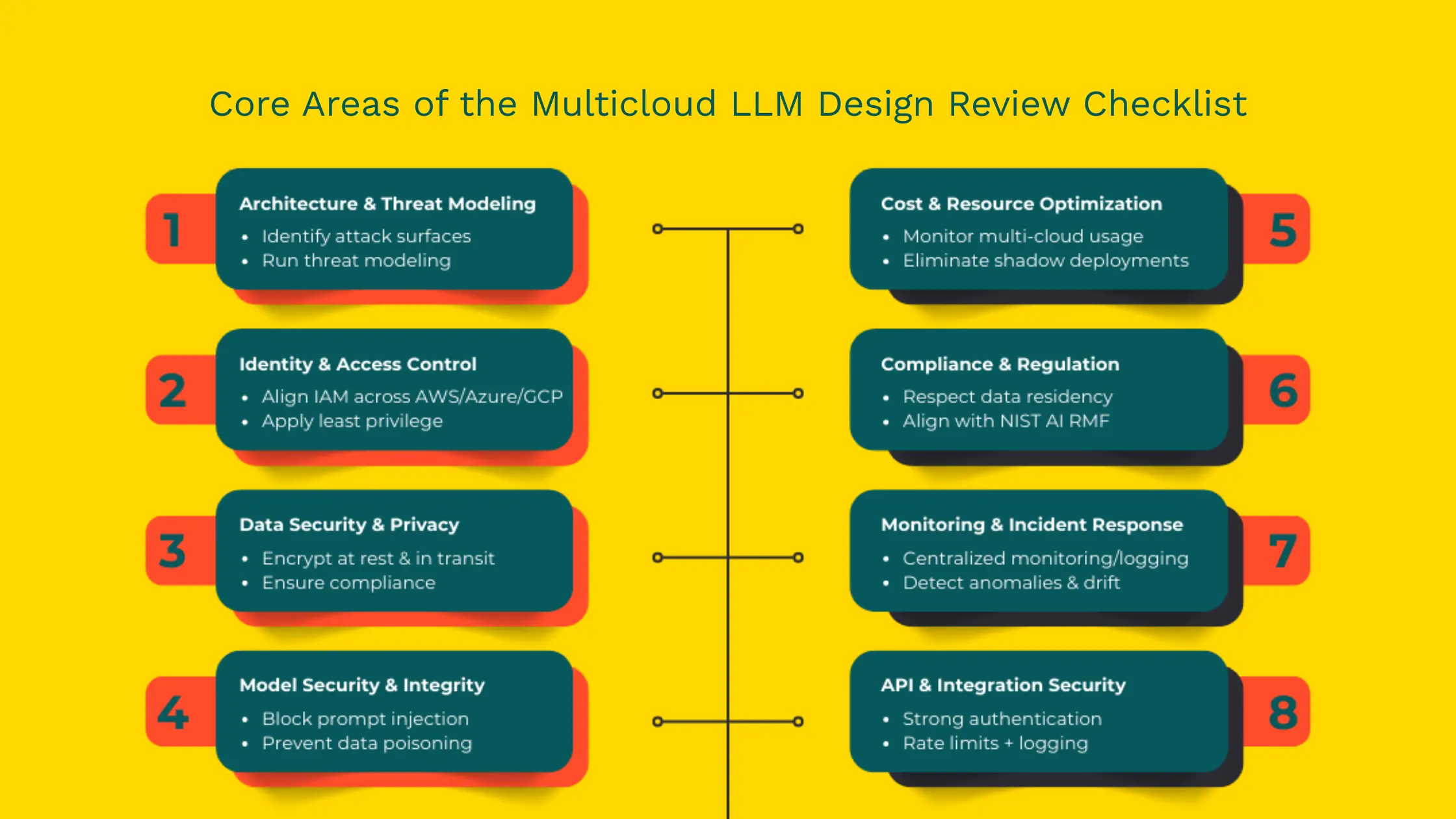

But running secure multicloud LLMs reliably requires more than lifting and shifting workloads. It demands a deliberate design review checklist that enforces consistent controls across heterogeneous environments.

A single cloud provider may not always meet business needs for latency, cost optimization, or data residency. However, deploying LLMs in a multicloud setting introduces additional complexity: data must flow securely across providers, identity and access controls must remain consistent, and compliance requirements must be met everywhere the model operates.

Misconfigurations, integration flaws, or overlooked design weaknesses can quickly lead to data leakage, compliance violations, or costly breaches.

That’s why a formal design review checklist and a focus on multicloud LLM security are essential for any organization running production LLMs.

Below are the most frequent issues enterprises encounter and why they matter:

Every cloud uses different identity primitives. Without a unified cloud design review and consistent role mapping, service accounts proliferate, privileges diverge, and the risk of lateral movement increases.

Adopt a unified identity strategy (federated SSO, short-lived credentials, centralized entitlements) as part of your design review checklist.
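To make the role-mapping idea concrete, here is a minimal sketch that assumes a hypothetical table mapping each provider-specific role to one canonical role. It flags identities whose role is unmapped or whose granted permissions exceed what the canonical role allows. All role names, permission strings, and the `find_drift` helper are illustrative assumptions, not any provider's IAM API.

```python
from dataclasses import dataclass

# Hypothetical canonical entitlements: one definition per logical role,
# independent of any provider's IAM vocabulary.
CANONICAL_ROLES = {
    "llm-invoker": {"model.invoke"},
    "llm-operator": {"model.invoke", "model.deploy", "logs.read"},
}

# Hypothetical mapping from (provider, provider-specific role) to a canonical role.
ROLE_MAP = {
    ("aws", "llm-invoke-role"): "llm-invoker",
    ("azure", "llm-invoke-role"): "llm-invoker",
    ("gcp", "llm-operator-role"): "llm-operator",
}

@dataclass
class Identity:
    principal: str
    provider: str
    provider_role: str
    granted: set[str]  # permissions actually granted in that provider

def find_drift(identities):
    """Report identities with unmapped roles or permissions beyond their canonical role."""
    findings = []
    for ident in identities:
        canonical = ROLE_MAP.get((ident.provider, ident.provider_role))
        if canonical is None:
            findings.append((ident.principal, "unmapped role"))
            continue
        excess = ident.granted - CANONICAL_ROLES[canonical]
        if excess:
            findings.append((ident.principal, f"excess: {sorted(excess)}"))
    return findings

ids = [
    Identity("svc-a", "aws", "llm-invoke-role", {"model.invoke"}),
    Identity("svc-b", "azure", "llm-invoke-role", {"model.invoke", "model.deploy"}),
    Identity("svc-c", "gcp", "legacy-admin", {"*"}),
]
print(find_drift(ids))
```

In practice the inventory would be exported from each provider's IAM, but the design choice is the same: a single canonical role table is what reviewers check against, rather than three separate IAM vocabularies.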

Data silos across providers lead to inconsistent encryption, backup, and retention policies. Validate that data stored in and moving between providers follows your cloud design review rules for encryption at rest, TLS enforcement in transit, and centralized key management, so that regulatory requirements are met.
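One way to catch these inconsistencies during review is to compare each provider's storage settings against a single baseline. The sketch below assumes the settings have already been collected into plain dictionaries. The field names, the 365-day retention ceiling, and the `check_storage_policy` helper are hypothetical.

```python
# Hypothetical baseline that every provider's storage configuration must meet.
BASELINE = {
    "encryption_at_rest": "AES-256",
    "min_tls_version": (1, 2),
    "kms": "central",
    "retention_days_max": 365,
}

def check_storage_policy(provider: str, cfg: dict) -> list[str]:
    """Compare one provider's (hypothetical) storage settings with the baseline."""
    issues = []
    if cfg.get("encryption_at_rest") != BASELINE["encryption_at_rest"]:
        issues.append(f"{provider}: encryption at rest is {cfg.get('encryption_at_rest')}")
    if tuple(cfg.get("min_tls_version", (1, 0))) < BASELINE["min_tls_version"]:
        issues.append(f"{provider}: TLS minimum below 1.2")
    if cfg.get("kms") != BASELINE["kms"]:
        issues.append(f"{provider}: keys not managed by the central KMS")
    # A missing retention setting is treated as a finding, not a pass.
    retention = cfg.get("retention_days")
    if retention is None or retention > BASELINE["retention_days_max"]:
        issues.append(f"{provider}: retention missing or above policy")
    return issues

configs = {
    "aws": {"encryption_at_rest": "AES-256", "min_tls_version": (1, 2),
            "kms": "central", "retention_days": 90},
    "gcp": {"encryption_at_rest": "AES-128", "min_tls_version": (1, 0),
            "kms": "provider-default", "retention_days": 90},
}
for name, cfg in configs.items():
    print(name, check_storage_policy(name, cfg))
```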

APIs, connectors, and orchestration tools behave differently on each cloud. Reconciling their authentication methods, logging formats, and rate limits is a core part of multicloud LLM security.
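Reconciling logging formats usually means mapping every provider's records onto one common schema before analysis. The following sketch assumes flat records with the illustrative field names shown; real provider log schemas are different and typically nested, so the `FIELD_MAPS` table would have to be built from each provider's documentation.

```python
from datetime import datetime, timezone

# Illustrative field names only; real provider log schemas differ and are
# usually nested, so these maps are assumptions for the sketch.
FIELD_MAPS = {
    "aws": {"ts": "eventTime", "principal": "caller", "action": "operation"},
    "azure": {"ts": "time", "principal": "identity", "action": "operationName"},
    "gcp": {"ts": "timestamp", "principal": "principal", "action": "method"},
}

def normalize(provider: str, record: dict) -> dict:
    """Map a flat provider-specific log record onto one common schema.

    Assumes timestamps carry a UTC offset or a trailing "Z".
    """
    fields = FIELD_MAPS[provider]
    # fromisoformat() before Python 3.11 rejects a trailing "Z", so rewrite it.
    ts = datetime.fromisoformat(record[fields["ts"]].replace("Z", "+00:00"))
    return {
        "provider": provider,
        "timestamp": ts.astimezone(timezone.utc).isoformat(),
        "principal": record[fields["principal"]],
        "action": record[fields["action"]],
    }

events = [
    normalize("aws", {"eventTime": "2024-05-01T12:00:00Z",
                      "caller": "svc-a", "operation": "InvokeModel"}),
    normalize("azure", {"time": "2024-05-01T14:00:00+02:00",
                        "identity": "svc-b", "operationName": "Completions"}),
]
for event in events:
    print(event)
```

Converting every timestamp to UTC is the detail that matters most here: without it, cross-cloud correlation of the same incident can be off by hours.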

A weak design review checklist lets deep coupling to proprietary APIs slip through, making migrations costly. Design for portability (containerization, model registries, abstraction layers) to preserve agility.
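An abstraction layer can be as small as one provider-neutral interface that application code depends on, with one adapter per cloud. This is a minimal sketch using `typing.Protocol`. `EchoBackend` and `UnavailableBackend` are hypothetical stand-ins for real adapters, which would wrap each provider's SDK.

```python
from typing import Protocol

class CompletionBackend(Protocol):
    """Provider-neutral interface that application code depends on."""
    def complete(self, prompt: str, max_tokens: int) -> str: ...

class EchoBackend:
    """Hypothetical stand-in; a real adapter would wrap one provider's SDK."""
    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, prompt: str, max_tokens: int) -> str:
        # Truncating characters stands in for a real token limit.
        return f"[{self.name}] {prompt[:max_tokens]}"

class UnavailableBackend:
    """Simulates a provider outage."""
    def complete(self, prompt: str, max_tokens: int) -> str:
        raise ConnectionError("provider unavailable")

class Router:
    """Tries backends in order, so swapping providers becomes a configuration change."""
    def __init__(self, backends: list[CompletionBackend]) -> None:
        self.backends = backends

    def complete(self, prompt: str, max_tokens: int = 256) -> str:
        last_error = None
        for backend in self.backends:
            try:
                return backend.complete(prompt, max_tokens)
            except Exception as exc:  # fail over to the next provider
                last_error = exc
        raise RuntimeError("all backends failed") from last_error

router = Router([UnavailableBackend(), EchoBackend("secondary")])
print(router.complete("Summarize the incident report", max_tokens=9))
```

Because callers only see `CompletionBackend`, moving a workload between clouds means changing the list passed to `Router`, not rewriting application code.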

Map data flows across providers, pinpoint lateral and external attack surfaces, and run threat models that include LLM-specific attacks (prompt injection, model exfiltration, poisoning). This step is the foundation of multicloud LLM security.
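A data-flow map can be kept as simple structured data, which makes the boundary crossings easy to enumerate for threat modeling. The sketch below assumes a hypothetical placement of components on providers and an illustrative mapping from data classification to LLM-specific threats. It only prioritizes flows that cross a provider or internet boundary; same-provider flows still deserve review.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Flow:
    source: str
    dest: str
    data: str  # data classification carried by the flow

# Hypothetical placement of each component.
PLACEMENT = {
    "user": "internet",
    "gateway": "aws",
    "inference": "azure",
    "training": "gcp",
    "vector-db": "gcp",
}

# LLM-specific threats to review for each data classification (illustrative).
THREATS = {
    "prompt": ["prompt injection"],
    "weights": ["model exfiltration"],
    "training-data": ["data poisoning"],
}

def threat_model(flows):
    """List flows that cross a provider or internet boundary, with threats to review."""
    findings = []
    for f in flows:
        if PLACEMENT[f.source] != PLACEMENT[f.dest]:
            findings.append((f, THREATS.get(f.data, ["review manually"])))
    return findings

flows = [
    Flow("user", "gateway", "prompt"),
    Flow("gateway", "inference", "prompt"),
    Flow("training", "vector-db", "embeddings"),  # same provider: not listed
    Flow("training", "inference", "weights"),
]
for flow, threats in threat_model(flows):
    print(f"{flow.source} -> {flow.dest} ({flow.data}): {threats}")
```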

Adopt Zero Trust across clouds. Enforce short-lived credentials, consistent role mapping, and fine-grained entitlements so that even privileged accounts cannot misuse the model. Document these controls in your cloud design review.
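The core Zero Trust rule, that every request carries a short-lived credential checked for both authenticity and authorization, can be illustrated with an HMAC-signed token that expires. This hand-rolled format is for illustration only. Production systems would normally rely on tokens issued by their identity provider, and the `SECRET` value and helper names here are assumptions.

```python
import base64
import hashlib
import hmac
import json
import time

SECRET = b"replace-with-key-from-central-kms"  # assumption: shared signing key

def issue_token(principal, role, ttl_seconds=300, now=None):
    """Issue a signed token that expires after ttl_seconds."""
    now = time.time() if now is None else now
    claims = {"sub": principal, "role": role, "exp": now + ttl_seconds}
    body = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode())
    sig = hmac.new(SECRET, body, hashlib.sha256).hexdigest()
    return body.decode() + "." + sig

def verify_token(token, required_role, now=None):
    """Authenticate and authorize every request; network location grants nothing."""
    now = time.time() if now is None else now
    try:
        body, sig = token.rsplit(".", 1)
    except ValueError:
        return False
    expected = hmac.new(SECRET, body.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(sig, expected):  # constant-time comparison
        return False
    claims = json.loads(base64.urlsafe_b64decode(body))
    return claims["exp"] > now and claims["role"] == required_role

tok = issue_token("svc-a", "llm-invoker", ttl_seconds=300, now=1_000)
print(verify_token(tok, "llm-invoker", now=1_100))
```

The short TTL limits how long a leaked credential stays useful, and the role check on every call is what stops a valid but under-privileged identity from reaching operations it was never granted.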

Standardize encryption (AES-256 at rest; TLS 1.2 or later in transit, preferably TLS 1.3). Use a centralized KMS or key escrow strategy to manage keys consistently across providers. For regulated workloads, include data residency rules and audit trails as mandatory checklist items.
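On the client side, the in-transit rule can be enforced in code rather than left to defaults. This sketch uses Python's standard `ssl` module to build a context that verifies certificates and refuses anything below TLS 1.2, or below TLS 1.3 when requested. The `fetch` helper is hypothetical, and encryption at rest is outside its scope.

```python
import ssl
import urllib.request

def make_client_context(require_tls13: bool = False) -> ssl.SSLContext:
    """TLS client context that verifies certificates and refuses old protocol versions."""
    ctx = ssl.create_default_context()  # certificate and hostname checks enabled
    ctx.minimum_version = (
        ssl.TLSVersion.TLSv1_3 if require_tls13 else ssl.TLSVersion.TLSv1_2
    )
    return ctx

def fetch(url: str, require_tls13: bool = False) -> bytes:
    # Hypothetical helper: every outbound call to a model endpoint goes through it.
    ctx = make_client_context(require_tls13)
    with urllib.request.urlopen(url, context=ctx, timeout=10) as resp:
        return resp.read()
```

Routing all outbound calls through one helper like this gives reviewers a single place to confirm the TLS floor, instead of auditing each integration separately.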

Treat every API as a full attack surface: require OAuth2 or mTLS, centralize gateways, harmonize rate limits, and ensure consistent logging. Make these controls explicit in your design review checklist.
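Harmonizing rate limits means applying the same limit to an API key no matter which cloud serves the request. A token bucket is one common way to express such a limit; the sketch below assumes hypothetical values of 5 requests per second with a burst of 10. It keeps state in a single process, so a real multicloud gateway would need the bucket state in a shared store.

```python
import time

# Hypothetical harmonized limit applied identically at every provider's gateway.
RATE_PER_SEC = 5.0
BURST = 10

class TokenBucket:
    """Token-bucket limiter: refills at `rate` tokens per second up to `capacity`."""
    def __init__(self, rate=RATE_PER_SEC, capacity=BURST, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Add tokens for the elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

buckets: dict[str, TokenBucket] = {}

def check_request(api_key: str) -> bool:
    """One bucket per API key, regardless of which cloud received the request."""
    if api_key not in buckets:
        buckets[api_key] = TokenBucket()
    return buckets[api_key].allow()

print([check_request("key-123") for _ in range(3)])
```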

Monitor GPU/TPU usage across vendors, define provisioning policies, detect shadow deployments, and enforce budget alerts. Cost governance should be a recurring line item in your cloud design review.
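Shadow-deployment detection and budget alerts both reduce to comparing a cross-cloud inventory against what was approved. The sketch assumes the inventory has already been exported. The approved set, the monthly budgets, the hourly rates, and the 80% alert ratio are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Deployment:
    provider: str
    deployment_id: str
    accelerator_hours: float
    hourly_rate: float  # hypothetical blended USD rate for the accelerator type

# Hypothetical registry of approved deployments and per-provider monthly budgets.
APPROVED = {("aws", "llm-prod-1"), ("gcp", "llm-prod-2")}
MONTHLY_BUDGET = {"aws": 20_000.0, "gcp": 15_000.0, "azure": 5_000.0}

def review_costs(inventory, alert_ratio=0.8):
    """Return (shadow deployments, spend per provider, providers near budget)."""
    shadow = [d for d in inventory if (d.provider, d.deployment_id) not in APPROVED]
    spend = {}
    for d in inventory:
        spend[d.provider] = spend.get(d.provider, 0.0) + d.accelerator_hours * d.hourly_rate
    # A provider with no budget entry gets 0.0, so any spend there raises an alert.
    alerts = [p for p, s in spend.items() if s >= alert_ratio * MONTHLY_BUDGET.get(p, 0.0)]
    return shadow, spend, alerts

inventory = [
    Deployment("aws", "llm-prod-1", accelerator_hours=1000, hourly_rate=12.0),
    Deployment("gcp", "llm-prod-2", accelerator_hours=100, hourly_rate=10.0),
    Deployment("azure", "test-gpu", accelerator_hours=500, hourly_rate=9.0),
]
shadow, spend, alerts = review_costs(inventory)
print(shadow, spend, alerts)
```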

Map workflows to GDPR, HIPAA, PCI-DSS, and local residency rules. Ensure audit logs, data retention policies, and access logging are consistent across providers.
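Residency rules are easiest to enforce consistently when they are written down as data that every deployment is checked against. In this sketch the mapping from data classification to allowed regions is a hypothetical policy, not legal guidance, and unclassified data passes by design so that only the rules you define are enforced.

```python
# Hypothetical residency policy: data classification -> regions where it may live.
RESIDENCY = {
    "eu-personal-data": {"eu-west-1", "westeurope", "europe-west1"},
    "phi": {"us-east-1", "eastus"},
}

def residency_violations(placements):
    """placements: iterable of (dataset, classification, region) tuples."""
    violations = []
    for dataset, classification, region in placements:
        allowed = RESIDENCY.get(classification)
        # Classifications without a rule are not restricted by this check.
        if allowed is not None and region not in allowed:
            violations.append((dataset, region))
    return violations

placements = [
    ("chat-logs", "eu-personal-data", "us-east-1"),
    ("claims", "phi", "eastus"),
    ("docs", "public", "us-west-2"),
]
print(residency_violations(placements))
```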

Centralize logs and set up cross-cloud analytics to detect unusual inference patterns (possible prompt injection or excessive queries). Define runbooks for model leaks, poisoned pipelines, and compromised API keys.
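Once logs are centralized, a simple baseline check can surface excessive queries per API key. This sketch flags a key when its latest hourly count exceeds the mean plus `k` standard deviations of its own history; keys without enough history fall back to a fixed floor. The threshold values are assumptions, and detecting prompt injection itself would require content analysis that this volume check does not attempt.

```python
import statistics

def flag_excessive_queries(history, current, k=3.0, min_floor=100):
    """Flag API keys whose latest hourly request count is anomalously high.

    history: api_key -> list of past hourly request counts (from centralized logs)
    current: api_key -> request count in the latest hour
    """
    flagged = []
    for key, count in current.items():
        past = history.get(key, [])
        if len(past) < 2:
            # No usable baseline yet: fall back to a fixed (hypothetical) floor.
            if count > min_floor:
                flagged.append(key)
            continue
        threshold = statistics.mean(past) + k * statistics.stdev(past)
        if count > threshold:
            flagged.append(key)
    return flagged

history = {"key-a": [100, 110, 90, 105, 95], "key-b": [50, 55, 45, 52, 48]}
current = {"key-a": 112, "key-b": 400, "key-new": 150}
print(flag_excessive_queries(history, current))
```

A flagged key is a trigger for the runbooks described above (for example, rotating a possibly compromised API key), not proof of an attack.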

Use this as part of the formal design review checklist for every multicloud LLM deployment:

Multicloud strategies are becoming essential for scalability and resilience. Yet this opportunity brings significant complexity. Data must flow securely across providers, and models must be protected against both traditional cyber threats and AI-specific attacks.

A structured design review checklist provides the roadmap organizations need to deploy LLMs with confidence. Skipping these steps risks turning innovation into liability.

Our approach integrates security at every layer of your multicloud LLM ecosystem. The result is trust, resilience, and the freedom to innovate without compromise.

Talk to ioSENTRIX today and let our experts help you design, test, and secure your LLM deployments against tomorrow’s threats.


Call us: +1 (888) 958-0554

A design review ensures that multicloud LLM deployments are secure, compliant, and cost-efficient. It identifies risks like misconfigured APIs, inconsistent IAM policies, and data leakage before they reach production.

The main risks include data leakage, prompt injection, data poisoning, misconfigured APIs, and weak access controls. These threats can expose sensitive information or compromise model integrity if not addressed during the design phase.

Zero Trust ensures that every request to the LLM is authenticated and authorized, regardless of origin. This prevents lateral movement across clouds and limits the impact of compromised accounts.

Cost control requires monitoring usage across providers, eliminating shadow deployments, and enforcing governance policies. A design review helps ensure resource efficiency and long-term cost predictability.

ioSENTRIX secures LLM deployments through penetration testing, red teaming, secure SDLC reviews, and full-stack security assessments. Our proactive approach integrates security into the design phase to ensure resilience, compliance, and trust.